Troubleshooting Logstash

Logstash is our log parser and shipper: it receives logs, parses them, and writes them to the Elasticsearch database, which creates a daily or weekly index depending on your configuration.

If you are not receiving logs in Kibana, the cause is likely one of the following problems:

- Elasticsearch node is having problems (refer to our earlier guide on troubleshooting Elasticsearch)

- Logstash server has run out of disk space

- A Logstash plugin is failing, which prevents Logstash from parsing files.

- CPU usage is high and no logs are being processed.

Logstash Issue # 1: Low disk space

Run the following command:

df -h

If any filesystem shows 100% usage, Logstash will not work.
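Reading the df table by eye is easy to get wrong on servers with many mounts. The sketch below filters the output down to the filesystems at or above a usage threshold. The helper name `full_filesystems` and the 90% threshold are assumptions chosen for illustration, and the parsing assumes mount points without spaces.

```shell
# full_filesystems is a hypothetical helper: it reads `df -P` style output
# on stdin and prints every mount point at or above the given usage percent.
full_filesystems() {
    threshold="${1:-90}"
    # Skip the header row, strip the % sign from column 5, compare numerically.
    awk -v limit="$threshold" '
        NR > 1 {
            use = $5
            sub(/%/, "", use)
            if (use + 0 >= limit) print $6 " " use "%"
        }'
}

# -P forces the portable one-line-per-filesystem layout, which is easier to parse.
df -P | full_filesystems 90
```

No output means no filesystem has reached the threshold.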

The most likely cause is that the log folder on your Logstash server has filled up with error logs or other related logs.

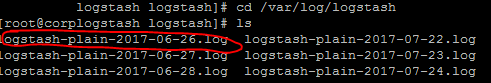

To check how much space the log folder, /var/log/logstash/, is using, run:

du -h /var/log/logstash/
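To see which individual files account for the space, the du output can be sorted by size. This is a minimal sketch; `largest_files` is a hypothetical helper name and the default of 10 entries is arbitrary.

```shell
# largest_files is a hypothetical helper: list the N largest files under a
# directory, biggest first, with sizes in KiB.
largest_files() {
    dir="$1"
    count="${2:-10}"
    # du -k prints sizes in 1 KiB units so that sort -n can order them.
    find "$dir" -type f -exec du -k {} + | sort -rn | head -n "$count"
}

# Only run against the Logstash log folder if it exists on this machine.
if [ -d /var/log/logstash ]; then
    largest_files /var/log/logstash 10
fi
```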


If the log folder is the cause of the low disk space, you may delete the logs within the folder:

cd /var/log/logstash && ls -lhS

Once you see which files are taking the most space, you may delete them individually.

Example: rm -rf logstashLogName.log

rm -rf logstash-plain-2017-06-26.log
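Deleting files one at a time gets tedious when many dated logs have piled up. As a rough sketch, the rotated files can be pruned by age with find. The helper name `prune_old_logs` and the 7-day cutoff are assumptions, and the file name pattern is inferred from the example file name above, so check it against your own folder first.

```shell
# prune_old_logs is a hypothetical helper: delete dated Logstash logs that
# were last modified more than N days ago, printing each file it removes.
prune_old_logs() {
    dir="$1"
    days="${2:-7}"
    # The pattern matches dated files such as logstash-plain-2017-06-26.log.
    # The active logstash-plain.log has no date suffix and is never matched.
    find "$dir" -maxdepth 1 -type f -name 'logstash-plain-*.log' \
        -mtime +"$days" -print -delete
}
```

Calling `prune_old_logs /var/log/logstash 7` removes the matching files. To preview what would be deleted, run the same find command without `-delete`.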

Logstash Issue # 2: Other error messages

Another reason you might not be seeing logs in Elasticsearch is that Logstash is having problems with a plugin you are using, or is failing to parse events in general.

Logstash writes its own logs to /var/log/logstash, and these are essential for troubleshooting.

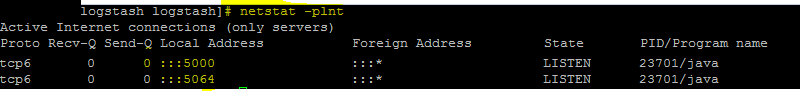

To check whether Logstash is running and listening on the port you configured, run the following:

netstat -plnt
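To check one port instead of scanning the whole list, the netstat output can be filtered. The sketch below is only an illustration: `port_is_listening` is a hypothetical helper, and port 5044 is an assumed example value, so substitute the port from your own Logstash input configuration.

```shell
# port_is_listening is a hypothetical helper: it reads `netstat -plnt` output
# on stdin and succeeds only if the given TCP port is in LISTEN state.
port_is_listening() {
    port="$1"
    # Column 4 is the local address (e.g. 0.0.0.0:5044 or :::5044),
    # column 6 is the socket state.
    awk -v p="$port" '
        $6 == "LISTEN" && $4 ~ (":" p "$") { found = 1 }
        END { exit !found }'
}

# 5044 is an assumed example port, not a value taken from this guide.
if command -v netstat >/dev/null 2>&1; then
    if netstat -plnt 2>/dev/null | port_is_listening 5044; then
        echo "port 5044 is listening"
    else
        echo "port 5044 is NOT listening"
    fi
fi
```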

Verify that your ports are listed. If your port is missing, run the following command to see what error messages Logstash is logging.

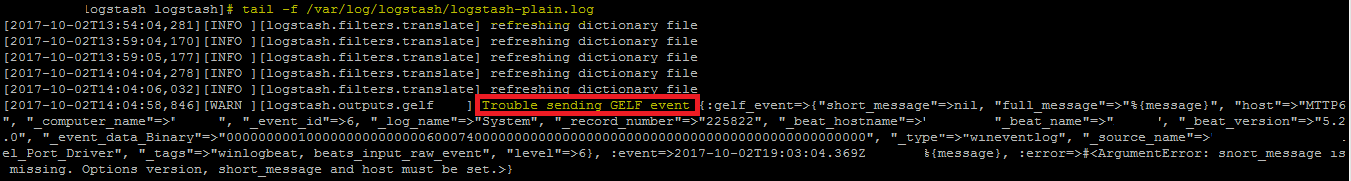

tail -f /var/log/logstash/logstash-plain.log

The log should show the errors Logstash is hitting, which might be related to:

- Plugin errors: issues with plugins such as GeoMapping, CIDR, etc.

- Grok patterns: you might have to inspect your Grok patterns to ensure they work.

- Connectivity issues with the Elasticsearch instance: dead Elasticsearch instances

- Too many open connections
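On a busy server the tail output scrolls past quickly, so it can help to show only the warning and error lines. This is a minimal sketch that assumes the default plain log layout, where the level appears in square brackets. `recent_problems` is a hypothetical helper name.

```shell
# recent_problems is a hypothetical helper: show the last N lines of a log
# whose level is WARN, ERROR, or FATAL.
recent_problems() {
    logfile="$1"
    count="${2:-20}"
    # Assumes the level is written in square brackets, e.g. [ERROR].
    grep -E '\[(WARN|ERROR|FATAL)' "$logfile" | tail -n "$count"
}

# Only run if the Logstash log exists and is readable on this machine.
if [ -r /var/log/logstash/logstash-plain.log ]; then
    recent_problems /var/log/logstash/logstash-plain.log 20
fi
```

The component name in brackets after the level usually indicates which of the categories above the error belongs to.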

You may be able to fix this by simply restarting the Logstash service:

service logstash restart

If restarting doesn’t fix it, take a look at the error messages and resolve the problem manually.

Thank you.